
A data lake gives you a durable, scalable home for all your organisation’s data (apps, portals, third-party platforms, devices, telemetry, exports) without needing the perfect reporting model on day one. Scorchsoft is a UK team of data lake developers.

You’ve probably got data scattered across apps, portals, third-party platforms and spreadsheets, and every time you want a simple answer you end up debating which system is “the truth”.

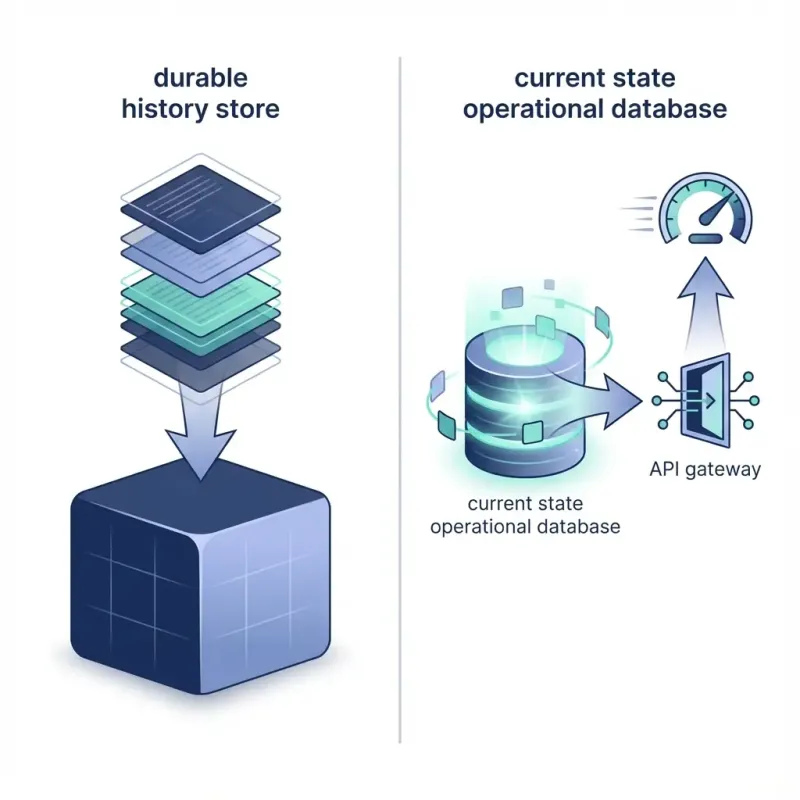

A data lake is your organisation’s long-term memory: it stores raw and historical data cheaply, at scale, so you can replay, reprocess, audit, and analyse over time. The common misunderstanding is treating it like an app database. You don’t point your mobile app at object storage and hope for the best — you keep “current state” in a fast operational store, and you treat the lake as the durable record.

In practice, “lake first” is a principle about durable history and reproducibility, not a literal wiring diagram where every live request must hit the lake before anything else. A data lake gives you durable history you can trust, without accidentally turning it into a slow, fragile substitute for your operational database.
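As a rough illustration of that split, here is a minimal Python sketch. The `Lake` and `OperationalStore` classes are hypothetical in-memory stand-ins (for object storage and a fast database respectively), and the device events are made-up sample data. It only shows the principle: every event is appended to the durable record, the operational store keeps the latest state, and that state can be rebuilt from history if needed.

```python
from dataclasses import dataclass, field


@dataclass
class Lake:
    """Hypothetical stand-in for object storage: an append-only event history."""
    events: list = field(default_factory=list)

    def append(self, event):
        # Store a copy so later changes to the caller's dict cannot alter history.
        self.events.append(dict(event))


@dataclass
class OperationalStore:
    """Hypothetical stand-in for a fast database: latest state per device only."""
    current: dict = field(default_factory=dict)

    def apply(self, event):
        self.current[event["device_id"]] = {"status": event["status"], "ts": event["ts"]}


def rebuild_state(lake):
    """Replay the full history in timestamp order to reconstruct current state."""
    store = OperationalStore()
    for event in sorted(lake.events, key=lambda e: e["ts"]):
        store.apply(event)
    return store


lake, store = Lake(), OperationalStore()
for event in [
    {"device_id": "d1", "status": "online", "ts": 1},
    {"device_id": "d2", "status": "online", "ts": 2},
    {"device_id": "d1", "status": "offline", "ts": 3},
]:
    lake.append(event)   # durable record: every event is kept
    store.apply(event)   # current state: only the latest per device
```

The app reads `store.current`; the lake is never on the request path, but `rebuild_state(lake)` can reproduce the same state after a bug fix or a migration.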

You want something that’s secure, scalable and cost-sane on AWS (and you don’t want a science project that only one engineer understands).

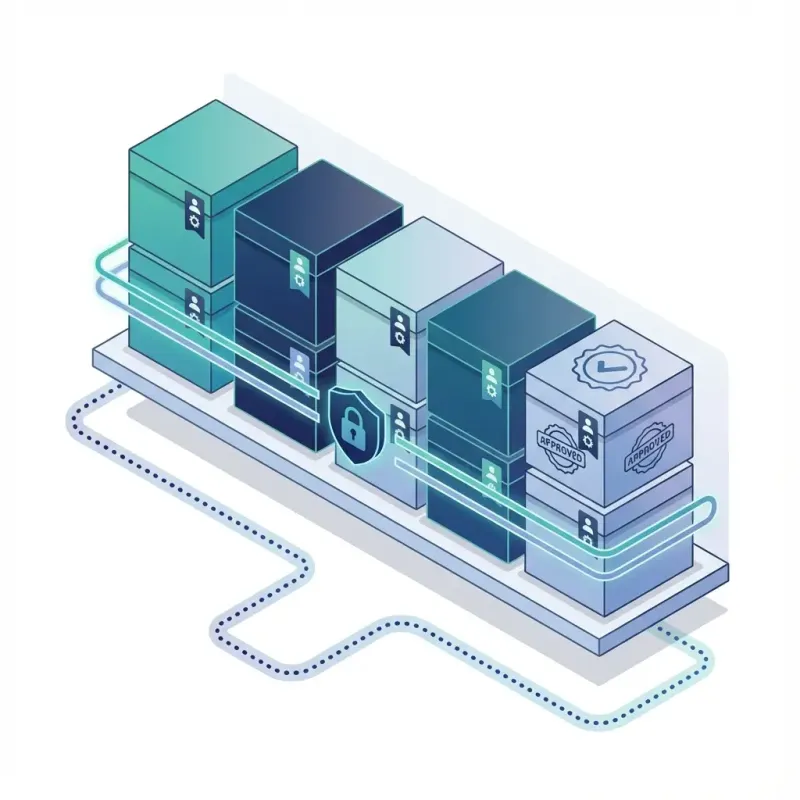

For AWS projects, we typically build an S3-based lake foundation with a catalog and strong access controls, then add ingestion patterns that suit the data type (batch extracts vs streaming telemetry). A practical baseline looks like this:

The right baseline architecture makes onboarding new sources predictable, keeps permissions auditable, and stops “quick wins” becoming long-term mess.

Most data lake projects don’t fail on storage — they fail when ingestion gets brittle, silent gaps appear, and suddenly Finance is missing Tuesday. You need ingestion patterns that match your reality (batch, streaming, CDC) so you can replay, recover, and evolve without breaking everything.

Different data needs different ingestion patterns, and this is where a lot of teams accidentally build something fragile. We normally choose from a small set of proven approaches:

We design for replay and failure: if one sink is down (e.g., warehouse load), the backbone lets you catch up later — otherwise you end up with silent gaps and awkward “why is Finance missing Tuesday?” meetings.
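To make the catch-up idea concrete, here is a small sketch under stated assumptions: `Backbone` is a hypothetical in-memory stand-in for a durable, ordered log, and the warehouse sink is simulated with a dictionary. It is not a specific AWS service, just the pattern of tracking a per-sink offset so that an outage delays delivery rather than losing records.

```python
class Backbone:
    """Hypothetical in-memory stand-in for a durable, ordered log."""

    def __init__(self):
        self.log = []
        self.offsets = {}  # sink name -> index of the next record to deliver

    def publish(self, record):
        self.log.append(record)

    def deliver(self, sink_name, write):
        """Send undelivered records to a sink, stopping at the first failure."""
        position = self.offsets.get(sink_name, 0)
        while position < len(self.log):
            try:
                write(self.log[position])
            except ConnectionError:
                break  # sink is down: keep the offset so we can retry later
            position += 1
        self.offsets[sink_name] = position
        return len(self.log) - position  # records still pending for this sink


warehouse = {"up": False, "rows": []}  # simulated sink (assumption for the demo)


def load_into_warehouse(record):
    if not warehouse["up"]:
        raise ConnectionError("warehouse unavailable")
    warehouse["rows"].append(record)


backbone = Backbone()
for day in ["Mon", "Tue", "Wed"]:
    backbone.publish({"day": day, "revenue": 100})

pending_during_outage = backbone.deliver("warehouse", load_into_warehouse)
warehouse["up"] = True
pending_after_recovery = backbone.deliver("warehouse", load_into_warehouse)
```

During the outage all three records stay pending; once the sink recovers, the same call delivers them in order, so Tuesday is late rather than missing.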

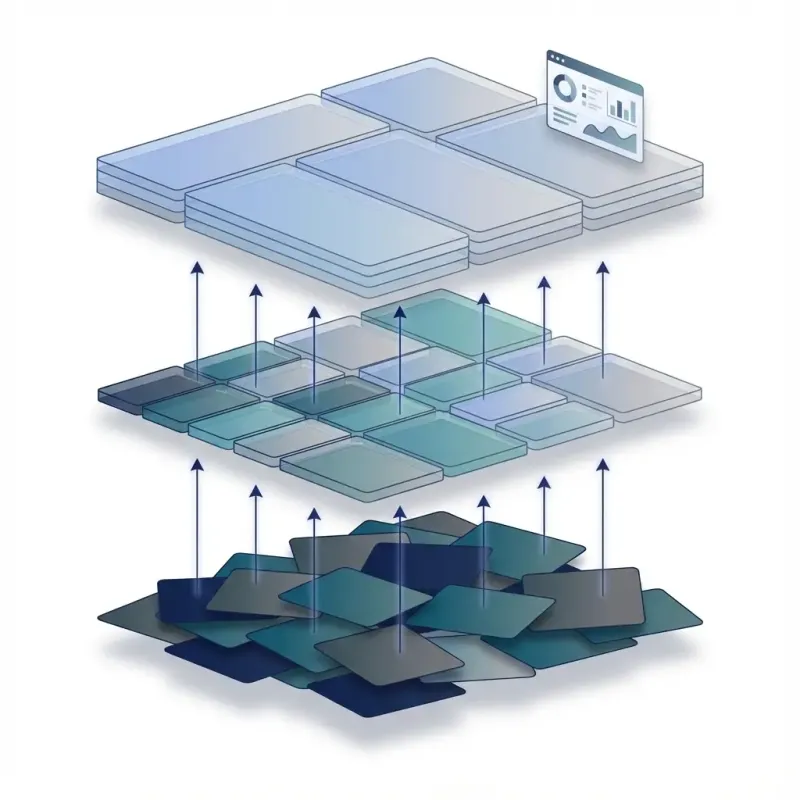

Raw data is not automatically useful, and “we dumped it in the lake” isn’t the same as “you can trust the numbers”. You need clear layers so you can keep evidence (Bronze), create reliable cleaned data (Silver), and publish business-ready datasets (Gold) without constant arguments about definitions.

A lake works when you separate “we captured it” from “we trust it”.

We don’t promote data “because it exists”; we promote it when a real user/report/product feature needs it — that’s how you avoid the classic data swamp.
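A minimal sketch of the three layers might look like the following. The order fields, the cleaning rules, and the `consumer` argument are illustrative assumptions rather than a fixed recipe; the point is that Bronze is kept untouched, Silver is cleaned and deduplicated, and Gold is only produced when a named consumer exists.

```python
import json


def to_silver(bronze_lines):
    """Parse raw lines, skip malformed ones, normalise types, dedupe by order_id."""
    seen, silver = set(), []
    for line in bronze_lines:
        try:
            raw = json.loads(line)
            row = {
                "order_id": str(raw["order_id"]),
                "amount": float(raw["amount"]),
                "region": raw["region"].strip().upper(),
            }
        except (ValueError, KeyError, TypeError, AttributeError):
            continue  # malformed record: it stays in Bronze as evidence
        if row["order_id"] in seen:
            continue  # duplicate delivery of the same order
        seen.add(row["order_id"])
        silver.append(row)
    return silver


def to_gold(silver_rows, consumer):
    """Publish a business-ready aggregate, but only for a named consumer."""
    if not consumer:
        raise ValueError("no consumer named: leave the data in Silver")
    totals = {}
    for row in silver_rows:
        totals[row["region"]] = totals.get(row["region"], 0.0) + row["amount"]
    return {"consumer": consumer, "revenue_by_region": totals}


# Made-up sample data: a duplicate, mixed types, and one unparseable line.
bronze = [
    '{"order_id": 1, "amount": "10.50", "region": " uk "}',
    '{"order_id": 1, "amount": "10.50", "region": " uk "}',
    '{"order_id": 2, "amount": 4, "region": "de"}',
    "not json at all",
]
silver = to_silver(bronze)
gold = to_gold(silver, consumer="finance-kpi-dashboard")
```

Because the raw lines are never modified, a change to the cleaning rules can be applied by re-running `to_silver` over the same Bronze data.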

The wrong file formats and “millions of tiny files” quietly turn your analytics bills into a bad joke and your queries into a waiting game. You want curated datasets that stay fast and cheap to query as you scale (instead of becoming a cost and performance trap).

Most sources arrive as JSON (or CSV). That’s fine for a raw landing zone because it preserves fidelity, but it’s not what you want to query forever. For curated datasets, we typically convert to columnar formats like Parquet so analytics engines can scan less, compress more, and query faster.
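To show why a columnar layout lets engines scan less, here is a pure-Python illustration. It is not Parquet itself (no compression, encoding, or file format is involved), and the device readings are made-up sample data; it simply pivots row-oriented records into columns and counts how many values a single-column query has to touch in each layout.

```python
def to_columns(rows):
    """Pivot row-oriented records into one list per field (column-oriented)."""
    fields = sorted({name for row in rows for name in row})
    return {name: [row.get(name) for row in rows] for name in fields}


rows = [
    {"device": "d1", "temp_c": 20.5, "firmware": "1.0.2"},
    {"device": "d2", "temp_c": 22.0, "firmware": "1.0.2"},
    {"device": "d3", "temp_c": 19.5, "firmware": "1.0.3"},
]
columns = to_columns(rows)

# Row layout: averaging temp_c means reading every field of every record.
values_touched_row_layout = sum(len(row) for row in rows)

# Column layout: the same query reads only the temp_c column.
values_touched_column_layout = len(columns["temp_c"])

average_temp = sum(columns["temp_c"]) / len(columns["temp_c"])
```

With three fields per record the row layout touches three times as many values for this query, and the gap widens as datasets gain more columns. Repeated values within a column, such as the firmware version here, are also part of why columnar formats tend to compress well.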

We also design around “small files” early (buffering, batching, compaction) because nothing ruins an analytics platform faster than millions of tiny objects and unpredictable query costs.
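As a sketch of the compaction step, the function below groups small files into batches that would each be rewritten as one larger file. The 128 MB target and the file names are assumptions for illustration only; the right target depends on your query engine and data.

```python
def plan_compaction(file_sizes, target_bytes):
    """Group (name, size) pairs greedily, in order, into batches of up to target_bytes."""
    batches, current, current_size = [], [], 0
    for name, size in file_sizes:
        # Close the current batch if adding this file would exceed the target.
        if current and current_size + size > target_bytes:
            batches.append(current)
            current, current_size = [], 0
        current.append(name)
        current_size += size
    if current:
        batches.append(current)
    return batches


MB = 1024 * 1024
# Hypothetical input: forty 8 MB objects landed by a frequent ingestion job.
small_files = [(f"part-{i:04d}.json", 8 * MB) for i in range(40)]
batches = plan_compaction(small_files, target_bytes=128 * MB)
```

Here forty small objects become three compaction batches, so a query opens three files instead of forty. A file larger than the target simply ends up in a batch of its own.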

If everyone can create their own version of the data, trust collapses and your teams stop using the platform. You need governance that feels practical — clear ownership, access controls, retention, and naming — so people move faster without arguing about whose dataset is “correct”.

The quickest way to break trust is letting “anyone dump anything into the lake”. It feels flexible, but it creates duplicated datasets, unclear ownership, and endless debates about which numbers are real.

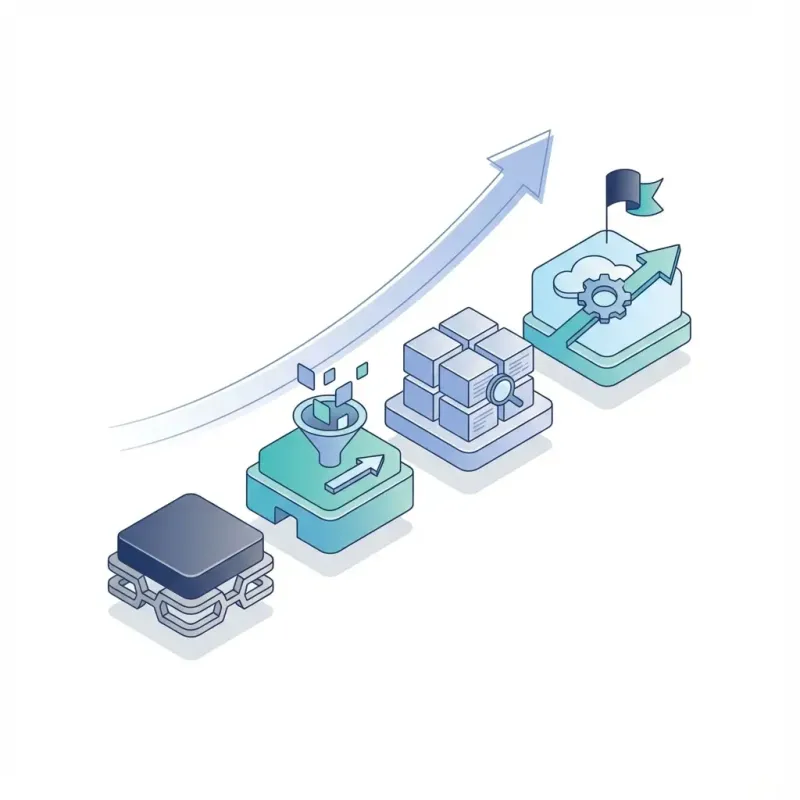

You don’t want a six-month “platform build” that delivers nothing your teams can use (and then gets quietly abandoned). You want quick, tangible outputs tied to real questions, with the foundations built in a way that makes the next 10 use cases easier, not harder.

We normally start by agreeing the first 3–5 business questions (the portal needs to answer X, the ops team needs Y, leadership needs Z). Then we build the lake foundation, onboard the highest-value sources, and deliver one or two “gold” outputs quickly (a portal view, a KPI dashboard, or an investigation-ready dataset). After that, we expand deliberately.

Scorchsoft can help you shape the right data lake approach for your organisation. That starts with identifying the sources you need to ingest (apps, portals, third-party platforms, devices, telemetry, exports), the questions you want to answer, and the datasets that will actually drive value for reporting, compliance, and AI.

We can support the technical delivery end-to-end: designing ingestion pipelines, setting up storage and governance, implementing security and access controls, building data quality checks, and curating “gold” datasets that stay consistent over time. We’ll also help you publish the data in a way your teams can use easily (dashboards, investigations, analytics, ML) without slowing down your operational systems.

Contact Scorchsoft if you need help delivering a robust, scalable data lake that turns messy data into something your business can trust.

Is a data lake the same as a data warehouse?

No — a lake stores raw/historical data flexibly; a warehouse stores curated, structured data optimised for fast reporting and consistent metrics.

Will my app read from the data lake?

Usually no. Apps need low-latency “current state” reads; the lake is for durable history, replay, audit, and analytics.

How do you avoid a “data swamp”?

Ownership + purpose + lifecycle: we only promote data beyond raw when there’s a consumer, a named owner, and a retention reason.

What’s Bronze/Silver/Gold actually for?

It separates “captured” from “trusted”: raw evidence, cleaned datasets, and business-ready definitions.

Do we need streaming ingestion?

Not always. Many projects start with API/export ingestion for quick wins, then add streaming where it genuinely adds value.

How do you handle schema changes over time?

We design for schema evolution (additive changes, versioned datasets for breaking changes, and curated contracts for BI).
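One way to picture the additive versus breaking distinction is the sketch below. The schema representation (a plain mapping of field name to type name), the `orders` dataset, and the `_v2` naming are hypothetical choices for illustration, not a fixed convention.

```python
def classify_change(old_schema, new_schema):
    """Return 'none', 'additive', or 'breaking' for schemas given as {field: type}."""
    removed = old_schema.keys() - new_schema.keys()
    retyped = {
        name
        for name in old_schema.keys() & new_schema.keys()
        if old_schema[name] != new_schema[name]
    }
    if removed or retyped:
        return "breaking"
    if new_schema.keys() - old_schema.keys():
        return "additive"
    return "none"


def next_dataset_name(name, version, old_schema, new_schema):
    """Keep the same dataset for additive changes; bump the version for breaking ones."""
    if classify_change(old_schema, new_schema) == "breaking":
        version += 1
    return f"{name}_v{version}"


v1 = {"order_id": "string", "amount": "double"}
with_currency = {**v1, "currency": "string"}                   # new optional field
amount_as_text = {"order_id": "string", "amount": "string"}    # type change
```

Adding `currency` keeps consumers on `orders_v1`, while changing the type of `amount` produces `orders_v2` so existing reports keep working against the old contract until they migrate.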

Can you do Azure or Google Cloud instead?

Yes — the patterns stay the same (durable storage + catalog + governance + curated layers + operational serving store); only the managed services change.

Who owns the data and definitions?

Your business owns meaning and priorities; we implement the platform, patterns, and guardrails (so you don’t inherit a black box).

We would love to hear about your project. Please contact us and share your goals; we'll respond with our thoughts and a rough cost estimate.

Scorchsoft is a UK-based team of web and mobile app developers and designers. We operate in-house from Birmingham, and our offices are located in the heart of the Jewellery Quarter.

Scorchsoft develops online portals, applications, web apps, and mobile app projects. With over fifteen years' experience working with hundreds of small, medium, and large enterprises in a diverse range of sectors, we'd love to discover how we can apply our expertise to your project.